Bitmap to BitmapSource

I was using Imaging.CreateBitmapSourceFromHBitmap to convert a GDI+ Bitmap to a BitmapSource for use in a WPF app, but the resulting images looked colour "flattened", as if they had a reduced palette. Web searches revealed about four different ways of doing the conversion, and they all produced the same result.

It turns out all the conversion code was working, but the ImageList providing the source images had the default ColorDepth of 8 bits, which was too low for the actual images. Removing the ImageList, adding it back with a 16-bit depth and adding the images back to its collection fixed the problem.

The CreateBitmapSourceFromHBitmap static method mentioned above works well, so ignore all the other ways of converting.
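For reference, here is a minimal sketch of the conversion, assuming a WPF project that also references System.Drawing. The class and method names (BitmapConversion, ToBitmapSource) are made up for illustration. The DeleteObject call is there because GetHbitmap allocates a GDI handle that is not released automatically.

```csharp
using System;
using System.Drawing;
using System.Runtime.InteropServices;
using System.Windows;
using System.Windows.Interop;
using System.Windows.Media.Imaging;

// Hypothetical helper class; the name is not from any library.
public static class BitmapConversion
{
    // GDI function used to release the handle created by Bitmap.GetHbitmap().
    [DllImport("gdi32.dll")]
    [return: MarshalAs(UnmanagedType.Bool)]
    private static extern bool DeleteObject(IntPtr hObject);

    public static BitmapSource ToBitmapSource(Bitmap bitmap)
    {
        IntPtr hBitmap = bitmap.GetHbitmap();
        try
        {
            BitmapSource source = Imaging.CreateBitmapSourceFromHBitmap(
                hBitmap,
                IntPtr.Zero,                          // no palette
                Int32Rect.Empty,                      // use the whole bitmap
                BitmapSizeOptions.FromEmptyOptions()); // keep the original size
            source.Freeze(); // make it safe to share across threads
            return source;
        }
        finally
        {
            // Always free the GDI handle, even if the conversion throws.
            DeleteObject(hBitmap);
        }
    }
}
```

If the images come from a WinForms ImageList, remember that the conversion can only preserve the colours the ImageList actually stored, so check its ColorDepth property first.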

Incredible Exceptions

When starting the Web API project I started getting weird exceptions like "could not load CoreLib.XmlSerializer" and "unexpected EOF or 0 bytes from the transport stream". I thought I had a terrible bug, an HTTPS misconfiguration or a mismatch in vital libraries, and I could not locate where in my code these errors were coming from. I eventually realised I had turned off VS2022 Debugging > Just My Code, so I was seeing exceptions thrown and handled deep inside the .NET runtime. I don't remember changing that setting, but ticking it on restored my previous calm debugging experience.

ASP.NET Core Out-of-Process

I deployed two ASP.NET Web API services to Azure in different virtual directories of the same App Service: one was .NET 5 and the other .NET 6. The second failed to start with this error:

500.35 ANCM Multiple In-Process Applications in same Process

The worker process can't run multiple in-process apps in the same process.

I knew this was caused by the in-process hosting model that newer versions of ASP.NET Core use by default, but it took almost an hour to find a way of reverting to the old behaviour, which is to put this line inside a PropertyGroup in the project files:

<AspNetCoreHostingModel>OutOfProcess</AspNetCoreHostingModel>

After redeploying, stopping and restarting the Azure App Service allows both apps to run side by side.

C# Records

I was so keen on the new C# 9 record types that I created a dozen of them in a new project because they save so much code. You can create a full-featured class (with value equality, ToString and init-only properties) like this:

public record MyThing(int Id, string Name, string? Note = null);

The trouble was, a week later I had to use the records in the requests and responses of a Web API, and I was quickly reminded that positional records don't have parameterless constructors, which some serializers require. I had to turn them back into normal classes.

Documentation claims that records work correctly with JSON serialization, so this issue is still being researched.
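As a quick way to check the documentation's claim, here is a small round-trip sketch using System.Text.Json on .NET 5 or later, which can deserialize through a record's positional constructor. It only covers System.Text.Json; other serializers (XmlSerializer, for example) do need a parameterless constructor, so this does not explain every failure. The sample values are arbitrary.

```csharp
#nullable enable
using System;
using System.Text.Json;

public record MyThing(int Id, string Name, string? Note = null);

public static class Program
{
    public static void Main()
    {
        var original = new MyThing(1, "Widget");

        // Serialize and deserialize with the default options.
        string json = JsonSerializer.Serialize(original);
        MyThing? copy = JsonSerializer.Deserialize<MyThing>(json);

        Console.WriteLine(json);
        // Records compare by value, so a successful round trip should print True.
        Console.WriteLine(original == copy);
    }
}
```

If this works in a console app but the same record fails in the Web API, the difference is probably in which serializer or which options the API is configured to use.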

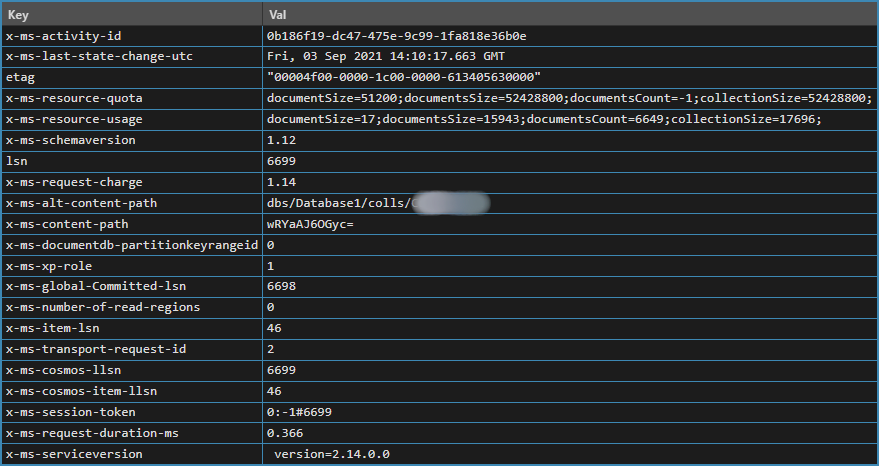

Cosmos DB LINQ Expression

After updating to the latest Microsoft.Azure.Cosmos 3.22.1 package (I forget the old version) I received a BadRequest response from a simple LINQ query. The problem was this where clause in the LINQ expression:

...Where(t => t.Pictures.Length == 0)

The array length comparison could not be transformed into a Cosmos SQL statement using ARRAY_LENGTH. Instead, the provider generated a call to some function named ArrayLength(), which failed. I'm not sure if this is a bug in the LINQ provider (unlikely?) or mixed versions of something. The fix was to use this condition instead:

...Where(t => t.Pictures.Count() == 0)
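When a LINQ query comes back as BadRequest, it can help to look at the SQL text the provider generates before running it. The sketch below assumes the Microsoft.Azure.Cosmos v3 SDK and its ToQueryDefinition extension method. The Thing class and the QueryInspector helper are hypothetical stand-ins for the real document type, and the Container is assumed to be created elsewhere.

```csharp
using System;
using System.Linq;
using Microsoft.Azure.Cosmos;
using Microsoft.Azure.Cosmos.Linq;

// Hypothetical document type with an array property, as in the query above.
public class Thing
{
    public string id { get; set; } = "";
    public string[] Pictures { get; set; } = Array.Empty<string>();
}

// Hypothetical helper; not part of the SDK.
public static class QueryInspector
{
    // Returns the SQL text the LINQ provider produces for the query,
    // so it can be logged or pasted into the Data Explorer.
    public static string DescribeEmptyPicturesQuery(Container container)
    {
        IQueryable<Thing> query = container
            .GetItemLinqQueryable<Thing>()
            .Where(t => t.Pictures.Count() == 0);

        return query.ToQueryDefinition().QueryText;
    }
}
```

Comparing the text produced by the Length and Count() versions should show whether the provider emits ARRAY_LENGTH or something else.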

Minimal Web APIs

Creating a Web API project requires a huge amount of repetitive ceremonial code, so I was quite thrilled to learn about the arrival of the minimal Web API in ASP.NET Core. An attempt to migrate an older controller-based project to the new format ended poorly. The actions are now registered through methods on the app object (of type WebApplication) instead of being methods in controller classes. The old project used a T4 template to generate dozens of method stubs, so that needed a complete refactor to get going.
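To show what the new shape looks like, here is a minimal sketch of a Program.cs for a .NET 6 project using the Web SDK with implicit usings enabled. The routes and the Thing record are invented for illustration and are not from the migrated project.

```csharp
// Program.cs in a .NET 6 project that uses Microsoft.NET.Sdk.Web
// (implicit usings supply the ASP.NET Core namespaces).
var builder = WebApplication.CreateBuilder(args);
var app = builder.Build();

// Each action is a delegate mapped to a route on the WebApplication,
// rather than a method on a controller class.
app.MapGet("/things/{id}", (int id) =>
    Results.Ok(new Thing(id, "sample")));

app.MapPost("/things", (Thing thing) =>
    Results.Created($"/things/{thing.Id}", thing));

app.Run();

// Hypothetical payload type used by both endpoints.
public record Thing(int Id, string Name);
```

A T4 template that used to emit controller methods would have to emit Map calls like these instead, which is why the migration turned into a refactor.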

The stumbling block was attempting to put a variety of custom attributes on the minimal methods. Some Swagger documentation can't be put on the minimal methods. Some filters aren't available either, and it took a while to stumble upon some web articles that list the shortcomings of minimal APIs.

So for now I consider them an interesting and useful experiment, but their limits should have been clearly documented. I'll return to considering migration once the shortcomings are plugged.

Validation and Nullable

After updating to Visual Studio 2022 and enabling nullable reference types I received a validation error response attempting to POST a class in the request body. I was not using any validation so it made no sense.

It turns out my class had a string id property which I was saving with a null value because it would be set on the server side. So unexpected and unwanted validation was happening silently. A crude but quick workaround was to define a special string UnsetId = "_UNSET_ID" which replaced the null value.
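A likely explanation, assuming a controller-based ASP.NET Core project: once nullable reference types are enabled, MVC treats a non-nullable reference-type property such as string Id as if it carried [Required], so a null value fails model validation. Two less crude options are to declare the property as string? or to switch the implicit behaviour off, as sketched below. The Item class is a hypothetical stand-in for the posted class.

```csharp
// Program.cs in a .NET 6 Web SDK project with nullable enabled.
var builder = WebApplication.CreateBuilder(args);

builder.Services.AddControllers(options =>
{
    // Stop MVC from inferring [Required] for non-nullable reference types.
    options.SuppressImplicitRequiredAttributeForNonNullableReferenceTypes = true;
});

var app = builder.Build();
app.MapControllers();
app.Run();

// Hypothetical request body type.
public class Item
{
    // Declaring the property nullable also avoids the implicit [Required],
    // without changing the global option above.
    public string? Id { get; set; }

    public string Name { get; set; } = "";
}
```

Either change on its own should be enough. Marking the property as nullable is the more targeted of the two because it documents that the server fills in the id.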

Minimal Web API Authorization

In a controller-based Web API project you can define an Attribute which implements IAuthorizationFilter to intercept every request and inspect the headers for the presence of a valid key. In a minimal API there are no controller methods to put attributes on, so you have to use a middleware class and intercept the requests in the InvokeAsync method. There are many samples available online.

If you use context.Response.WriteAsync(string) like the samples then you will get an empty response body. I found a call to context.Response.WriteAsJsonAsync(string) was needed to get a body.
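The middleware approach described above can be sketched roughly as follows. The header name X-Api-Key, the ApiKey configuration entry and the class name ApiKeyMiddleware are all assumptions for illustration. The rejection body is written with WriteAsJsonAsync, the ASP.NET Core extension method for writing a JSON response.

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.AspNetCore.Http;
using Microsoft.Extensions.Configuration;

// Hypothetical middleware; the names and the header are illustrative only.
public class ApiKeyMiddleware
{
    private const string HeaderName = "X-Api-Key"; // assumed header name
    private readonly RequestDelegate _next;
    private readonly string _expectedKey;

    public ApiKeyMiddleware(RequestDelegate next, IConfiguration config)
    {
        _next = next;
        _expectedKey = config["ApiKey"] ?? ""; // assumed configuration entry
    }

    public async Task InvokeAsync(HttpContext context)
    {
        bool hasHeader = context.Request.Headers.TryGetValue(HeaderName, out var supplied);
        bool valid = hasHeader
            && _expectedKey.Length > 0
            && string.Equals(supplied.ToString(), _expectedKey, StringComparison.Ordinal);

        if (!valid)
        {
            // Short-circuit the pipeline with a 401 and a JSON body.
            context.Response.StatusCode = StatusCodes.Status401Unauthorized;
            await context.Response.WriteAsJsonAsync(new { error = "Missing or invalid API key" });
            return;
        }

        // Key is valid, so continue to the mapped endpoint.
        await _next(context);
    }
}
```

It would be registered in Program.cs with app.UseMiddleware<ApiKeyMiddleware>() before the endpoints are mapped.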

Minimal Web API no XML

The extension method AddXmlSerializerFormatters doesn't seem to add automatic XML support as it does in controller-based projects. One web post pointing to this line of code hints that only JSON is supported.

More to come ... I'm in the middle of renovating a large project suite while moving it up to .NET 6, and I'm stumbling over many weird 'gotchas' that need documenting for posterity.